    geom_linerange(aes(ymin = Low_salary, ymax = High_salary)) +
    geom_hline(aes(yintercept = median(Mean_salary)), lty = 2, col = 'red', alpha = 0.7) +

The median monthly salary is around 3700 euros. As you can see, the salaries vary a lot depending on the company. This is partly because I didn't distinguish between the different data science jobs (data scientist, data analyst, data engineer, senior or lead).

We can plot the same graph, but grouped by job title instead of by company:

    filter(Low_salary > 1600) %>% # To remove internships and freelance work
    select(Job_title_c, Low_salary, High_salary, Job_type) %>%
    summarize_if(is.numeric, ~ mean(.x, na.rm = TRUE)) %>%
    mutate(Mean_salary = rowMeans(cbind(Low_salary, High_salary), na.rm = TRUE),
           Job_title_c = fct_reorder(Job_title_c, desc(-Mean_salary))) %>%
    ggplot(aes(x = Job_title_c, y = Mean_salary)) +
    #geom_label(aes(label = n, Job_title_c, y = 1500), data = count_df) +

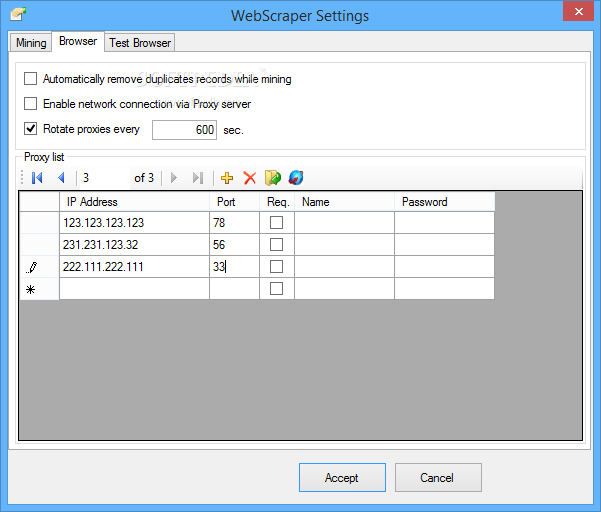
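
The `Mean_salary` column used in the plots is the row-wise mean of the low and high salary bounds, and the dashed red line marks the median of that column. As a minimal sketch of that computation in base R, here is a toy example; the values in `salaries` are made up for illustration and are not scraped data.

```r
# Toy salary ranges (hypothetical values, not taken from the scraped data)
salaries <- data.frame(
  Low_salary  = c(3000, 2500, NA, 4000),
  High_salary = c(4000, NA, 3800, 5000)
)

# Row-wise mean of the two bounds; with na.rm = TRUE a job post that
# lists only one bound simply keeps that bound as its mean
salaries$Mean_salary <- rowMeans(
  cbind(salaries$Low_salary, salaries$High_salary),
  na.rm = TRUE
)

# Median of the per-post means, i.e. the value drawn by geom_hline()
median_salary <- median(salaries$Mean_salary)
```

Note that a post with both bounds missing would get `NaN` here, so such rows are probably best dropped before plotting.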

Optimizing my search for Data scientist jobs by scraping Indeed with R | R-bloggers

    %>% # Clean the data to only get numbers

For now, we can only scrape the data from the first page. However, I am interested in all the job posts, so I need to access the other pages. After navigating through the first 3 pages of listed jobs, I noticed a pattern in the URL (valid at the time of writing). This means that with one line of code, I can produce a list containing the URLs of the first 40 pages.

Once I have the list, the only thing left is to loop over all the URLs with some delay (good practice for web scraping), collect the data and clean it with custom functions (at the end of the post):

    # Creating URL link corresponding to the first 40 pages
    html_elements(css = ".resultContent") %>%
    html_element(css = ".company_location") %>%
    lapply(FUN = tidy_comploc) %>% # Function to clean the textual data
    tidy_job_desc() # Function to clean the textual data related to job desc.
    html_elements(css = ".mosaic-provider-jobcards.
    lapply(FUN = tidy_salary) %>% # Function to clean the data related to salary
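
The two steps described above (building the list of page URLs, then looping over it with a pause) could be sketched roughly as follows in base R. This is only an illustration under assumptions: `base_url`, the `start=` offset in steps of 10, and the helper names `scrape_pages` and `fetch_page` are hypothetical and do not come from the post, whose actual URL pattern is not shown.

```r
# Hypothetical base URL and offset parameter: the real pattern has to be
# read off the site's own result pages
base_url <- "https://example.com/jobs?q=data+scientist&start="

# One line builds the 40 page URLs (offsets 0, 10, ..., 390)
page_urls <- paste0(base_url, seq(from = 0, by = 10, length.out = 40))

# Visit every URL in turn, pausing between requests.
# `fetch_page` is any function that takes one URL and returns the data
# scraped from that page.
scrape_pages <- function(urls, fetch_page, delay = 2) {
  results <- vector("list", length(urls))
  for (i in seq_along(urls)) {
    results[[i]] <- fetch_page(urls[i])
    if (i < length(urls)) Sys.sleep(delay)  # no pause after the last page
  }
  results
}
```

In practice `fetch_page` might wrap `rvest::read_html()` followed by the `html_elements()` calls and cleaning functions; passing it in as an argument keeps the delay logic separate from the parsing.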
